Big Data Engineer Training in Sydney

Simplilearn and IBM have come together in this collaboration for our big data engineer training in Sydney. This training program teaches aspiring big data engineers the essentials that will help them jump-start their careers. From AWS services to Hadoop frameworks, students learn the tools needed to excel when they enroll in our big data engineer training in Sydney.

Application closes on: 3 Jan, 2025

Program duration: 7 months

Learning Format: Live, Online, Interactive

Why Join this Program

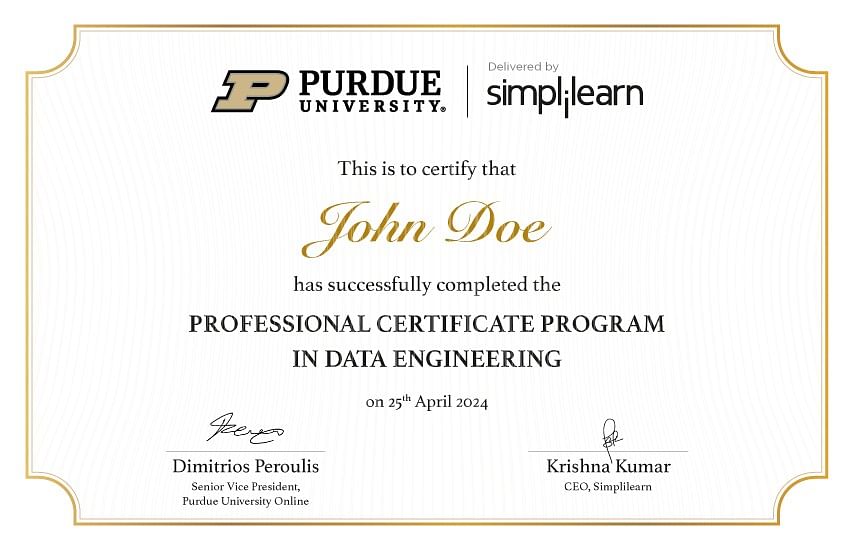

Earn an Elite Certificate

Joint program completion certificate from Purdue University Online and Simplilearn

Leverage the Purdue Edge

Gain access to Purdue’s Alumni Association membership on program completion

Certification Aligned

Learn courses that are aligned with AWS, Microsoft, and Snowflake certifications

Career Assistance

Build your resume and highlight your profile to recruiters with our career assistance services.

Data Engineering Course Overview

The data engineer course in Sydney primarily focuses on using the Hadoop framework for distributed processing, Spark for large-scale data processing, and the AWS and Azure cloud infrastructures for processing data. Completing this data engineer course in Sydney equips you for a career in data engineering.

Key Features

- Program completion certificate from Purdue University Online and Simplilearn

- Access to Purdue’s Alumni Association membership on program completion

- 150+ hours of core curriculum delivered in live online classes by industry experts

- Capstone from 3 domains and 14+ projects with industry datasets from YouTube, Glassdoor, Facebook, etc.

- Aligned with Microsoft DP-203, AWS Certified Data Engineer - Associate, and SnowPro® Core Certification

- Live sessions on the latest AI trends, such as generative AI, prompt engineering, explainable AI, and more

- Case studies on top companies like Uber, Flipkart, FedEx, Nvidia, RBS and Netflix

- Learn through 20+ tools to gain practical experience

- 8X higher live interaction in live Data Engineering online classes by industry experts

Data Engineering Course Advantage

This data engineering program equips you with the latest tools (Python, SQL, Cloud, Big Data) to tackle complex data challenges. Master data wrangling, build data pipelines, and gain Big Data expertise (Hadoop, Spark) through this program.

Partnering with Purdue University

- Receive a joint Purdue-Simplilearn certificate

- An opportunity to get Purdue’s Alumni Association membership

Data Engineering Course Details

Fast-track your career as a data engineering professional with our course. The curriculum covers big data and data engineering concepts, the Hadoop ecosystem, Python basics, AWS EMR, QuickSight, SageMaker, the AWS cloud platform, and Azure services.

Learning Path

Get started with the Data Engineering certification course in partnership with Purdue University and explore the basics of the program. Kick-start your journey with preparatory courses on Data Engineering with Scala and Hadoop, and Big Data for Data Engineering.

- Procedural and OOP understanding

- Python and IDE installation

- Using Jupyter Notebook

- Implementing identifiers, indentations, comments

- Python data types, operators, string identification

- Types of Python loops

- Variable scope in functions

- OOP concepts and characteristics

- Databases and their interconnections.

- Popular query tools and handling SQL commands.

- Transactions, table creation, and utilizing views.

- Execute stored procedures for complex operations.

- SQL functions, including those related to strings, mathematics, date and time, and pattern matching.

- Functions related to user access control to ensure the security of databases.

- Understanding MongoDB

- Document structure and schema design

- Data modeling for scalability

- CRUD operations and querying

- Indexing and performance optimization

- Security and access control

- Data management and processing

- Integration and scalability

- Developing data pipelines

- Monitoring and performance optimization

- Hadoop ecosystem and optimization

- Ingest data using Sqoop, Flume, and Kafka

- Partitioning, bucketing, and indexing in Hive

- RDD in Apache Spark

- Process real-time streaming data

- DataFrame operations in Spark using SQL queries

- User-Defined Functions (UDF) and User-Defined Aggregate Functions (UDAF) in Spark

- Understand the fundamental concepts of the AWS platform and cloud computing

- Identify AWS concepts, terminologies, benefits, and deployment options to meet business requirements

- Identify deployment and network options in AWS

- Data engineering fundamentals

- Data ingestion and transformation

- Orchestration of data pipelines

- Data store management

- Data cataloging systems

- Data lifecycle management

- Design data models and schema evolution

- Automate data processing by using AWS services

- Maintain and monitor data pipelines

- Data Security and Governance

- Authentication mechanisms

- Authorization mechanisms

- Data encryption and masking

- Prepare logs for audit

- Data privacy and governance

- Describe Azure storage and create Azure web apps

- Deploy databases in Azure

- Understand Azure AD, cloud computing, Azure, and Azure subscriptions

- Create and configure VMs in Microsoft Azure

- Implement data storage solutions using Azure SQL Database, Azure Synapse Analytics, Azure Data Lake Storage, Azure Data Factory, Azure Stream Analytics, Azure Databricks services

- Develop batch processing and streaming solutions

- Monitor Data Storage and Data Processing

- Optimize Azure Data Solutions

By the end of the course, you can showcase your newly acquired skills in a hands-on, industry-relevant capstone project that combines everything you learned in the program into one portfolio-worthy example. You can work on 3 projects to make your practice more relevant.

Electives:

- Attend live generative AI masterclasses and learn how to leverage it to streamline workflows and enhance efficiency.

- Conducted by industry experts, these masterclasses delve deep into AI-powered creativity.

- Snowflake structure

- Overview and Architecture

- Data protection features

- Cloning

- Time travel

- Metadata and caching in Snowflake

- Query performance

- Data Loading

The GCP Fundamentals course will teach you to analyze and deploy infrastructure components such as networks, storage systems, and application services in the Google Cloud Platform. This course covers IAM, networking, and cloud storage and introduces you to the flexible infrastructure and platform services provided by Google Cloud Platform.

This course introduces Source Code Management (SCM), focusing on Git and GitHub. Learners will understand the importance of SCM in the DevOps lifecycle and gain hands-on experience with Git commands, GitHub features, and common workflows such as forking, branching, and merging. By the end, participants will be equipped to efficiently manage and collaborate on code repositories using Git and GitHub in real-world scenarios.

Contact Us

1-800-982-536 (Toll Free)

12+ Skills Covered

- Real Time Data Processing

- Data Pipelining

- Big Data Analytics

- Data Visualization

- Provisioning data storage services

- Apache Hadoop

- Ingesting Streaming and Batch Data

- Transforming Data

- Implementing Security Requirements

- Data Protection

- Encryption Techniques

- Data Governance and Compliance Controls

Industry Projects

- Project 1

Market Basket Analysis Using Instacart

Conduct market analysis for an online grocery delivery and pick-up service using a large data set.

- Project 2

YouTube Video Analysis

Measure user interactions to rank the top trending videos on YouTube and determine actionable insights.

- Project 3

Data Visualization Using Azure Synapse

Build a dashboard that visualizes sales data to estimate demand across all locations. A retailer will use it to decide where to open a new branch.

- Project 4

Data Ingestion End-to-End Pipeline

Upload data to Azure Data Lake Storage and save large data sets to Delta Lake on Azure Databricks so that files can be accessed at any time.

- Project 5

Server Monitoring with AWS

Monitor the performance of an EC2 instance to gather data from all its components and understand how to debug failures.

- Project 6

E-Commerce Analytics

Analyze the sales data to derive significant region-wise insights and include details on the product evaluation.

Disclaimer - The projects have been built leveraging real publicly available datasets from organizations.

Program Advisors and Trainers

Program Advisors

Aly El Gamal

Assistant Professor, Purdue University

Aly El Gamal has a Ph.D. in Electrical and Computer Engineering and an M.S. in Mathematics from the University of Illinois. Dr. El Gamal specializes in the areas of information theory and machine learning and has received multiple commendations for his research and teaching expertise.

Program Trainers

Wyatt Frelot

Senior Technical Instructor, 20+ years of experience

Armando Galeana

Founder and CEO, 20+ years of experience

Makanday Shukla

Enterprise Architect, 15+ years of experience

Amit Singh

Technical Architect, 12+ years of experience

Batch Profile

The Professional Certificate Program in Data Engineering caters to working professionals across different industries. Learner diversity adds richness to class discussions.

The class consists of learners from excellent organizations and diverse industries.

Industry

- Information Technology: 40%

- Software Product: 15%

- BFSI: 15%

- Manufacturing: 15%

- Others: 15%

Alumni Review

I’m Christian Lopez, a Cognitive Neuroscience graduate from UC San Diego, now working as a data scientist at a leading Las Vegas bank. With skills in data wrangling, machine learning, and big data, I joined Purdue's PGP in Data Engineering via Simplilearn to boost my career. The program was top-notch, and I enjoyed every module. It provided me with new projects at work and a 10% salary hike. My goal is to specialize in AWS Data Analytics and transition into cloud engineering.

Christian Lopez

Data Scientist - Data Strategy & Governance


Admission Details

Application Process

Candidates can apply to this Data Engineering course in 3 steps. Selected candidates receive an admission offer, which they accept by paying the admission fee.

Submit Application

Tell us why you want to take this Data Engineering course

Reserve Your Seat

An admission panel will shortlist candidates based on their application

Start Learning

Selected candidates can begin the Data Engineering course within 1-2 weeks

Eligibility Criteria

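For admission to this Data Engineering course, candidates should have:

- A bachelor’s degree with an average of 50% or higher marks

- 2+ years of work experience (preferred)

- Basic understanding of object-oriented programming (preferred)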

Admission Fee & Financing

The admission fee for this Data Engineering course is A$ 2,690. It covers applicable program charges and the Purdue Alumni Association membership fee.

Financing Options

We are committed to making our programs accessible and offer a variety of financing options to help you budget for this program.

Total Program Fee

A$ 2,690

Pay In Installments, as low as

A$ 269/mo

You can pay monthly installments for Post Graduate Programs using the Splitit payment option with 0% interest and no hidden fees.

Program Benefits

- Program Certificate from Purdue Online and Simplilearn

- Access to Purdue’s Alumni Association membership

- Courses aligned with AWS, Azure, and Snowflake certification

- Case studies on top firms like Uber, Nvidia, RBS and Netflix

- Active recruiters include Google, Microsoft, Amazon and more

Program Cohorts

| Next Cohort | Date | Time | Batch Type |
| --- | --- | --- | --- |
| Program Induction | 3 Jan, 2025 | 01:00 AEDT | |
| Regular Classes | 23 Feb, 2025 - 1 Sep, 2025 | 01:00 - 05:00 AEDT | Weekend (Su, M) |

Data Engineering Course FAQs

For admission to this Post Graduate Program in Data Engineering in Sydney, candidates need:

- A bachelor’s degree with an average of 50% or higher marks

- 2+ years of work experience (preferred)

- Basic understanding of object-oriented programming (preferred)

The admission process consists of three simple steps:

- All interested candidates are required to apply through the online application form

- An admission panel will shortlist the candidates based on their application

- An offer of admission will be made to the selected candidates, who accept it by paying the program fee

To ensure money is not a barrier to learning, we offer various financing options that make this Post Graduate Program in Data Engineering in Sydney financially manageable. Please refer to our “Admission Fee & Financing” section for more details.

As a part of this Post Graduate Program, in collaboration with IBM, you will receive the following:

- Purdue Post Graduate Program certification

- Industry recognized certificates from IBM and Simplilearn

- Purdue Alumni Association membership

- Lifetime access to all core e-learning content created by Simplilearn

- $1,200 worth of IBM Cloud credits for your personal use

Upon successful completion of this program in Sydney, you will be awarded a Post Graduate Program in Data Engineering certification by Purdue University. You will also receive industry-recognized certificates from IBM and Simplilearn for the courses on the learning path.

The average salary of a big data engineer in Sydney is AU$99,995 per year. Gaining the relevant skills and knowledge through data engineering training in Sydney can help you earn more than this average.

Major companies in Sydney looking to hire big data engineers include Accenture, D TEX Systems, IBM, The Weir Group PLC, and Luxoft. A certification from data engineering training in Sydney can improve your chances of working as a big data engineer at any of these companies.

Oil, gas, and utilities are major revenue generators for Sydney’s economy. Other industries, such as commerce, transport, retail, tourism, and finance, also contribute to it. Each of these industries wants big data engineers who will develop, maintain, test, and evaluate their big data. If you have completed a data engineering course in Sydney, you can have a promising career in this field.

To become a big data engineer in Sydney, you need a bachelor's degree in a technical subject such as computer engineering or information technology. A few internships with companies' data engineering teams are a bonus for your resume. A data engineering course in Sydney and a subsequent certification can further strengthen your case for landing the job you want.

Numerous universities and institutes across Sydney offer big data engineering courses. Before enrolling in a data engineering course in Sydney, it is crucial to do a background check on the institute you choose. Ask about the experience of the teaching faculty, how the courses are structured, which areas of the subject they cover, whether the course duration is long enough to gain ample knowledge, and whether placement assistance is provided. Numerous online platforms are also springing up with compelling courses.

Today, small and large companies depend on data to help answer important business questions. Data engineering plays a crucial role in supporting this process by making the available data reliably accessible for others to inspect. This makes data engineers important assets to organizations, and they earn lucrative salaries worldwide. Here are some average yearly estimates:

- India: INR 10.5 Lakhs

- US: USD 131,713

- Canada: CAD 98,699

- UK: GBP 52,142

- Australia: AUD 118,000

Yes, data engineers are expected to have basic programming skills in Java, Python, R or any other language.

After completing this program, you will be eligible for the Purdue University Alumni Association membership, giving you access to resources and networking opportunities even after earning your certificate.

We offer 24/7 support through chat for any urgent issues. For other queries, we have a dedicated team that offers email assistance and on-request callbacks.
